适用于 Google Ads n-gram 的免费 Python 脚本

已发表: 2022-04-12N-gram 可以成为分析 Google Ads 或 SEO 上的搜索查询的重要武器。 因此,我们制作了一个免费的 Python 脚本来帮助您分析产品提要和搜索查询中任意长度的 n-gram。 我们将解释什么是 n-gram,以及如何使用 n-gram 来优化您的 Google Ads,尤其是针对 Google Shopping。 最后,我们将向您展示如何使用我们的免费 n-gram 脚本来改善您的 Google Ads 结果。

什么是 n-gram?

N-gram 是从较长的文本中提取的 N 个单词的短语。 这里的“N”可以替换为任意数字。

例如,在“the cat jumped on the mat”这样的句子中,“cat jumped”或“the mat”都是 2-grams(或“bi-grams”)。

“The cat jumped”或“cat jumped on”都是这句话中 3-grams(或“tri-grams”)的例子。

n-gram 如何帮助搜索查询

N-gram 在分析 Google Ads 中的搜索查询时很有用,因为某些关键短语可以出现在许多不同的搜索查询中。

N-gram 让我们可以分析这些短语对您整个库存的影响。 因此,它们可以让您在规模上做出更好的决策和优化。

它甚至可以让我们理解单个单词的影响。 例如,如果您发现包含“免费”(“1-gram”)一词的搜索结果不佳,您可能会决定从所有广告系列中排除该词。

或者,通过包含“个性化”的搜索查询的强劲表现可能会鼓励您建立专门的广告系列。

N-gram 对于查看来自 Google 购物的搜索查询特别有用。

产品详情广告关键字定位的自动化特性意味着您可以针对数十万个搜索查询进行展示。 特别是当您拥有大量具有非常特定功能的产品变体时。

我们的 N-gram 脚本可让您从杂乱无章的地方切入重要的短语。

使用 n-gram 分析搜索查询

n-gram 的第一个用例是分析搜索查询。

我们用于 Google Ads 的 n-gram python 脚本包含有关如何运行它的完整说明,但是

我们将讨论如何充分利用它。

- 您需要在您的机器上安装 python 才能开始。 如果你不这样做,这很容易。 您首先安装 Anaconda。 然后,打开 Anaconda Prompt 并输入“conda install jupyter lab”。 Jupyter Lab 是您将在其中运行此脚本的环境。

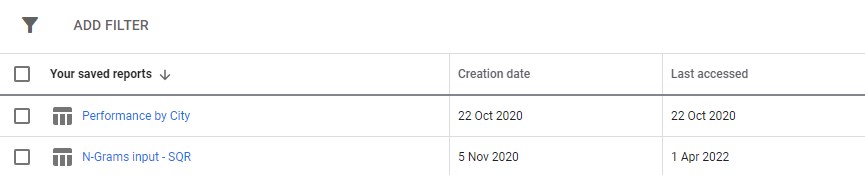

只需从您的 Google Ads 下载搜索查询报告。 我们建议在 Google Ads 的“报告”部分将其设置为自定义报告。 如果您希望跨多个帐户运行此脚本,您甚至可以在 MCC 级别进行设置。

3. 然后,只需更新脚本中的设置以执行您想要的操作,然后运行所有单元格。

运行需要一点时间,但请保持耐心。 您将在底部看到进度更新,因为它发挥了它的魔力。

输出将在您运行脚本的任何文件夹中显示为 excel 文件,我们建议使用下载文件夹。 该文件将使用您定义的任何名称进行标记。

excel 文件的每个选项卡都包含不同的 n-gram 分析。 例如,这是一个二元语法分析:

您可以通过多种方式使用此报告来寻找改进。

您可以从支出最高的短语开始,然后查看 CPA 或 ROAS 的任何异常值。

将您的报告过滤给非转化者也将突出显示支出不足的领域。

如果您在单个单词选项卡中看到较差的转换器,您可以轻松检查在 3-Gram 选项卡中使用的单词的上下文。

例如,在专业培训帐户中,我们可能会发现“Oxford”始终表现不佳。 3-Gram 选项卡很快表明用户可能正在寻找更正式的、面向大学的课程。

因此,您可以快速否定这个词。

最终,请使用此报告,但它最适合您。

使用 n-gram 优化产品提要

我们用于 Google Ads 的第二个 n-gram 脚本会分析您的产品效果。

同样,您可以在脚本本身中找到完整的说明

此脚本查看产品标题中短语的表现。 毕竟,产品标题在很大程度上决定了你出现的关键词。 标题也是您的主要广告文案。 因此,标题非常重要。

该脚本旨在帮助您在这些标题中找到潜在的短语,您可以对其进行调整以提高您在 Google 购物中的表现。

正如你在上面看到的,这个脚本的输出有点不同。 由于 Google 报告 API 的限制,您在产品标题级别时无法访问转化指标(如收入)。

因此,此报告为您提供产品数量、平均流量和产品短语的传播(标准偏差)。

因为它基于可见性,所以您可以使用该报告来识别可能使您的产品更加可见的短语。 然后,您可以将这些添加到您的更多产品标题中。

例如,我们可能会发现一个平均展示次数非常多且出现在多个产品标题中的短语。 它的标准偏差也很低——这意味着这里不可能只有一种令人惊叹的产品会扭曲我们的数据。

我们使用此工具极大地扩大了客户的购物知名度。 客户销售了数千种不同尺寸的产品。 例如 8x10 毫米。 人们也在购买他们需要的非常具体的尺寸。

但是,谷歌在理解这种大小的不同可能的命名约定方面很糟糕:像 8 毫米 x 10 毫米、8 x 10 毫米、8 毫米 x 10 毫米这样的搜索都被视为几乎完全不同的搜索查询。

因此,我们使用我们的 n-gram 脚本来确定这些命名约定中的哪一个为我们的产品提供了最佳可见性。

我们找到了最佳匹配项并对我们的产品 Feed 进行了更改。 结果,客户购物活动的流量在一个月内猛增了550%以上。

产品命名很重要,n-gram 可以帮助您。

Google Ads 的 N-gram 脚本

这是两个完整的脚本。

用于 Google Ads 搜索查询的 N-gram 脚本

#!/usr/bin/env python #coding: utf-8 # Ayima N-Grams Script for Google Ads #Takes in SQR data and outputs data split into phrases of N length #Script produced by Ayima Limited (www.ayima.com) # 2022. This work is licensed under a CC BY SA 4.0 license (https://creativecommons.org/licenses/by-sa/4.0/). #Version 1.1 ### Instructions #Download an SQR from within the reports section of Google ads. #The report must contain the following metrics: #+ Search term #+ Campaign #+ Campaign state #+ Currency code #+ Impressions #+ Clicks #+ Cost #+ Conversions #+ Conv. value #Then, complete the setting section as instructed and run the whole script #The script may take some time. Ngrams are computationally difficult, particularly for larger accounts. But, give it enough time to run, and it will log if it is running correctly. ### Settings # First, enter the name of the file to run this script on # By default, the script only looks in the folder it is in for the file. So, place this script in your downloads folder for ease. file_name = "N-Grams input - SQR (2).csv" grams_to_run = [1,2,3] #What length phrases you want to retrieve for. Recommended is < 4 max. Longer phrases retrieves far more results. campaigns_to_exclude = "" #input strings contained by campaigns you want to EXCLUDE, separated by commas if multiple (eg "DSA,PLA"). Case Insensitive campaigns_to_include = "PLA" #input strings contained by campaigns you want to INCLUDE, separated by commas if multiple (eg "DSA,PLA"). Case Insensitive character_limit = 3 #minimum number of characters in an ngram eg to filter out "a" or "i". Will filter everything below this limit client_name = "SAMPLE CLIENT" #Client name, to label on the final file enabled_campaigns_only = False #True/False - Whether to only run the script for enabled campaigns impressions_column_name = "Impr." #Google consistently flip between different names for their impressions column. We think just to annoy us. This spelling was correct at time of writing brand_terms = ["BRAND_TERM_2", #Labels brand and non-brand search terms. You can add as many as you want. Case insensitive. If none, leave as [""] "BRAND_TERM_2"] #eg ["Adidas","Addidas"] ## The Script #### You should not need to make changes below here import pandas as pd from nltk import ngrams import numpy as np import time import re def read_file(file_name): #find the file format and import if file_name.strip().split('.')[-1] == "xlsx": return pd.read_excel(f"{file_name}",skiprows = 2, thousands =",") elif file_name.strip().split('.')[-1] == "csv": return pd.read_csv(f"{file_name}",skiprows = 2, thousands =",") df = read_file(file_name) def filter_campaigns(df, to_ex = campaigns_to_exclude,to_inc = campaigns_to_include): to_ex = to_ex.split(",") to_inc = to_inc.split(",") if to_inc != ['']: to_inc = [word.lower() for word in to_inc] df = df[df["Campaign"].str.lower().str.strip().str.contains('|'.join(to_inc))] if to_ex != ['']: to_ex = [word.lower() for word in to_ex] df = df[~df["Campaign"].str.lower().str.strip().str.contains('|'.join(to_ex))] if enabled_campaigns_only: try: df = df[df["Campaign state"].str.contains("Enabled")] except KeyError: print("Couldn't find 'Campaign state' column") return df def generate_ngrams(list_of_terms, n): """ Turns a list of search terms into a set of unique n-grams that appear within """ # Clean the terms up first and remove special characters/commas etc. #clean_terms = [] #for st in list_of_terms: #st = st.strip() #clean_terms.append(re.sub(r'[^a-zA-Z0-9\s]', '', st)) #split into grams unique_ngrams = set() for term in list_of_terms: grams = ngrams(term.split(" "), n) [unique_ngrams.add(gram) for gram in grams] all_grams = set([' '.join(tups) for tups in unique_ngrams]) if character_limit > 0: n_grams_list = [ngram for ngram in all_grams if len(ngram) > character_limit] return n_grams_list def _collect_stats(term): #Slice the dataframe to the terms at hand sliced_df = df[df["Search term"].str.match(fr'. {re.escape(term)}. ')] #add our metrics raw_data =list(np.sum(sliced_df[[impressions_column_name,"Clicks","Cost","Conversions","Conv. value"]])) return raw_data def _generate_metrics(df): #generate metrics try: df["CTR"] = df["Clicks"]/df[f"Impressions"] df["CVR"] = df["Conversions"]/df["Clicks"] df["CPC"] = df["Cost"]/df["Clicks"] df["ROAS"] = df[f"Conv. value"]/df["Cost"] df["CPA"] = df["Cost"]/df["Conversions"] except KeyError: print("Couldn't find a column") #replace infinites with NaN and replace with 0 df.replace([np.inf, -np.inf], np.nan, inplace=True) df.fillna(0, inplace= True) df.round(2) return df def build_ngrams_df(df, sq_column_name ,ngrams_list, character_limit = 0): """Takes in n-grams and df and returns df of those metrics df - dataframe in question sq_column_name - str. Name of the column containing search terms ngrams_list - list/set of unique ngrams, already generated character_limit - Words have to be over a certain length, useful for single word grams Outputs a dataframe """ #set up metrics lists to drop values array = [] raw_data = map(_collect_stats, ngrams_list) #stack into an array data = np.array(list(raw_data)) #turn the above into a dataframe columns = ["Impressions","Clicks","Cost","Conversions", "Conv. value"] ngrams_df = pd.DataFrame(columns= columns, data=data, index = list(ngrams_list), dtype = float) ngrams_ df.sort_values(by = "Cost", ascending = False, inplace = True) #calculate additional metrics and return return _generate_metrics(ngrams_df) def group_by_sqs(df): df = df.groupby("Search term", as_index = False).sum() return df df = group_by_sqs(df) def find_brand_terms(df, brand_terms = brand_terms): brand_terms = [str.lower(term) for term in brand_terms] st_brand_bool = [] for i, row in df.iterrows(): term = row["Search term"] #runs through the term and if any of our brand strings appear, labels the column brand if any([brand_term in term for brand_term in brand_terms]): st_brand_bool.append("Brand") else: st_brand_bool.append("Generic") return st_brand_bool df["Brand label"] = find_brand_terms(df) #This is necessary for larger search volumes to cut down the very outlier terms with extremely few searches i = 1 while len(df)> 40000: print(f"DF too long, at {len(df)} rows, filtering to impressions greater than {i}") df = df[df[impressions_column_name] > i] i+=1 writer = pd.ExcelWriter(f"Ayima N-Gram Script Output - {client_name} N-Grams.xlsx", engine='xlsxwriter') df.to_excel(writer, sheet_name='Raw Searches') for n in grams_to_run: print("Working on ",n, "-grams") n_grams = generate_ngrams(df["Search term"], n) print(f"Found {len(n_grams)} n_grams, building stats (may take some time)") n_gram_df = build_ngrams_df(df,"Search term", n_grams, character_limit) print("Adding to file") n_gram_df.to_excel(writer, sheet_name=f'{n}-Gram',) writer.close()用于分析产品提要的 N-gram 脚本

#!/usr/bin/env python #coding: utf-8 # N-Grams for Google Shopping #This script looks to find patterns and trends in product feed data for Google shopping. It will help you find what words and phrases have been performing best for you #Script produced by Ayima Limited (www.ayima.com) # 2022. This work is licensed under a CC BY SA 4.0 license (https://creativecommons.org/licenses/by-sa/4.0/). #Version 1.1 ## Instructions #Download an SQR from within the reports section of Google ads. #The report must contain the following metrics: #+ Item ID #+ Product title #+ Impressions #+ Clicks #Then, complete the setting section as instructed and run the whole script #The script may take some time. Ngrams are computationally difficult, particularly for larger accounts. But, give it enough time to run, and it will log if it is running correctly. ## Settings #First, enter the name of the file to run this script on #By default, the script only looks in the folder it is in for the file. So, place this script in your downloads folder for ease. file_name = "Product report for N-Grams (1).csv" grams_to_run = [1,2,3] #The number of words in a phrase to run this for (eg 3 = three-word phrases only) character_limit = 0 #Words have to be over a certain length, useful for single word grams to rule out tiny words/symbols title_column_name = "Product Title" #Name of our product title column desc_column_name = "" #Name of our product description column, if we have one file_label = "Sample Client Name" #The label you want to add to the output file (eg the client name, or the run date) impressions_column_name = "Impr." #Google consistently flip between different names for their impressions column. We think just to annoy us. This spelling was correct at time of writing client_name = "SAMPLE" ## The Script #### You should not need to make changes below here #First import all of the relevant modules/packages import pandas as pd from nltk import ngrams import numpy as np import time import re #import our data file def read_file(file_name): #find the file format and import if file_name.strip().split('.')[-1] == "xlsx": return pd.read_excel(f"{file_name}",skiprows = 2, thousands =",") elif file_name.strip().split('.')[-1] == "csv": return pd.read_csv(f"{file_name}",skiprows = 2, thousands =",")# df = read_file(file_name) df.head() def generate_ngrams(list_of_terms, n): """ Turns our list of product titles into a set of unique n-grams that appear within """ unique_ngrams = set() for term in list_of_terms: grams = ngrams(term.split(" "), n) [unique_ngrams.add(gram) for gram in grams if ' ' not in gram] ngrams_list = set([' '.join(tups) for tups in unique_ngrams]) return ngrams_list def _collect_stats(term): #Slice the dataframe to the terms at hand sliced_df = df[df[title_column_name].str.match(fr'. {re.escape(term)}. ')] #add our metrics raw_data = [len(sliced_df), #number of products np.sum(sliced_df[impressions_column_name]), #total impressions np.sum(sliced_df["Clicks"]), #total clicks np.mean(sliced_df[impressions_column_name]), #average number of impressions np.mean(sliced_df["Clicks"]), #average number of clicks np.median(sliced_df[impressions_column_name]), #median impressions np.std(sliced_df[impressions_column_name])] #standard deviation return raw_data def build_ngrams_df(df, title_column_name, ngrams_list, character_limit = 0, desc_column_name = ''): """ Takes in n-grams and df and returns df of those metrics df - our dataframe title_column_name - str. Name of the column containing product titles ngrams_list - list/set of unique ngrams, already generated character_limit - Words have to be over a certain length, useful for single word grams to rule out tiny words/symbols desc_column_name = str. Name of the column containing product descriptions, if applicable Outputs a dataframe """ #first cut it to only grams longer than our minimum if character_limit > 0: ngrams_list = [ngram for ngram in ngrams_list if len(ngram) > character_limit] raw_data = map(_collect_stats, ngrams_list) #stack into an array data = np.array(list(raw_data)) #data[np.isnan(data)] = 0 columns = ["Product Count","Impressions","Clicks","Avg. Impressions", "Avg. Clicks","Median Impressions","Spread (Standard Deviation)"] #turn the above into a dataframe ngrams_df = pd.DataFrame(columns= columns, data=data, index = list(ngrams_list)) #clean the dataframe and add other key metrics ngrams_df["CTR"] = ngrams_df["Clicks"]/ ngrams_df["Impressions"] ngrams_df.fillna(0, inplace=True) ngrams_df.round(2) ngrams_df[["Impressions","Clicks","Product Count"]] = ngrams_df[["Impressions","Clicks","Product Count"]].astype(int) ngrams_df.sort_values(by = "Avg. Impressions", ascending = False, inplace = True) return ngrams_df writer = pd.ExcelWriter(f"{client_name} Product Feed N-Grams.xlsx", engine='xlsxwriter') start_time = time.time() for n in grams_to_run: print("Working on ",n, "-grams") n_grams = generate_ngrams(df["Product Title"], n) print(f"Found {len(n_grams)} n_grams, building stats (may take some time)") n_gram_df = build_ngrams_df(df,"Product Title", n_grams, character_limit) print("Adding to file") n_gram_df.to_excel(writer, sheet_name=f'{n}-Gram',) time_taken = time.time() - start_time print("Took "+str(time_taken) + " Seconds") writer.close()您还可以在 github 上找到 n-gram 脚本的更新版本以进行下载。

这些脚本在 Creative Commons BY SA 4.0 许可下获得许可,这意味着您可以自由分享和改编它,但我们鼓励您始终免费分享您的改进版本供所有人使用。 N-gram 太有用了,不能分享。

这只是我们向 Ayima Insights Club 成员提供的众多免费工具、脚本和模板之一。 您可以了解有关 Insights Club 的更多信息,并在此处免费注册。